Apply Phi model with HuggingFace Causal LM

HuggingFace is a popular open-source platform that develops tools for building applications with machine learning. It is widely known for its Transformers library, which contains open-source implementations of transformer models for text, image, and audio tasks.

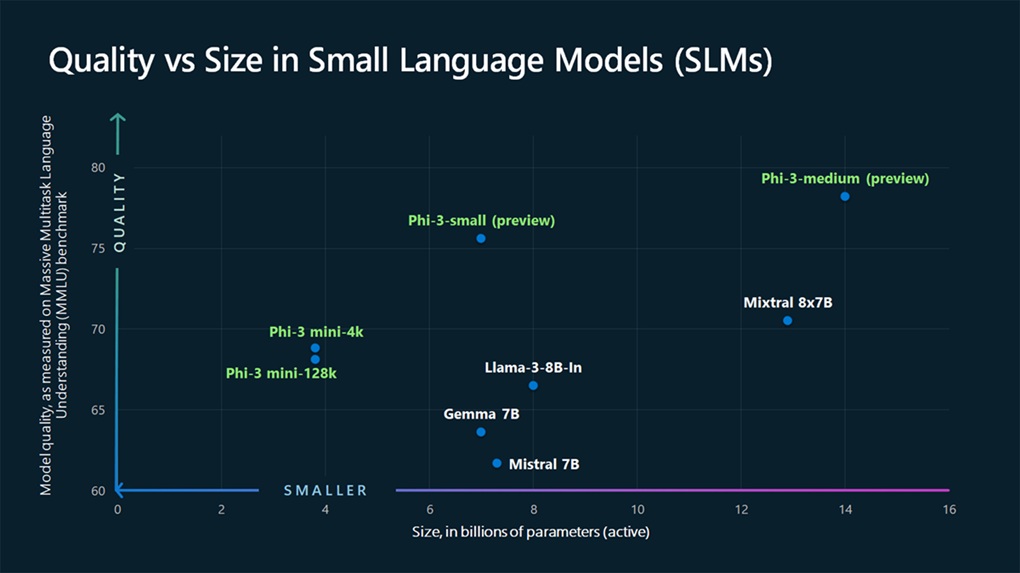

Phi-3 is a family of small language models (SLMs) developed by Microsoft. Microsoft describes the Phi-3 models as its most capable and cost-effective SLMs, reporting that they outperform models of the same size, and even larger ones, on language, reasoning, coding, and math benchmarks.

To make it easier to scale up causal language model prediction on a large dataset, we have integrated HuggingFace Causal LM with SynapseML. This integration lets you use the Apache Spark distributed computing framework to run text generation tasks over large datasets.

This tutorial shows how to apply a Phi-3 model at scale with no extra setup.

# %pip install --upgrade transformers==4.49.0 -q

chats = [
    (1, "fix grammar: helol mi friend"),
    (2, "What is HuggingFace"),
    (3, "translate to Spanish: hello"),
]

chat_df = spark.createDataFrame(chats, ["row_index", "content"])

chat_df.show()
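For a longer list of prompts, the (row_index, content) tuples do not have to be written by hand. The following is a minimal sketch, assuming the prompts arrive as a plain Python list of strings. `build_chats` is a hypothetical helper introduced here for illustration; it is not part of SynapseML or PySpark.

```python
def build_chats(prompts, start=1):
    """Pair each non-empty prompt with a sequential row index.

    Hypothetical helper: blank or whitespace-only prompts are skipped
    so that they do not produce empty input rows.
    """
    cleaned = [p.strip() for p in prompts if p and p.strip()]
    return list(enumerate(cleaned, start=start))


example_prompts = ["fix grammar: helol mi friend", "   ", "What is HuggingFace"]
example_chats = build_chats(example_prompts)
print(example_chats)
```

The returned list has the same shape as `chats`, so it can be passed to `spark.createDataFrame` with the same `["row_index", "content"]` column names.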

Define and apply the Phi-3 model

The following example demonstrates how to load the remote Phi-3 model from HuggingFace and apply it to the chats DataFrame.

from synapse.ml.llm.HuggingFaceCausallmTransform import HuggingFaceCausalLM

# Read prompts from the "content" column and write generations to "result".
phi3_transformer = (
    HuggingFaceCausalLM()
    .setModelName("microsoft/Phi-3-mini-4k-instruct")
    .setInputCol("content")
    .setOutputCol("result")
    .setModelParam(max_new_tokens=100)
)

# collect() brings the transformed rows back to the driver as a list of Row objects.
result_df = phi3_transformer.transform(chat_df).collect()

display(result_df)

Use local cache

By caching the model, you can reduce initialization time. On Fabric, store the model in a Lakehouse and use setCachePath to load it.

# %%sh
# azcopy copy "https://mmlspark.blob.core.windows.net/huggingface/microsoft/Phi-3-mini-4k-instruct" "/lakehouse/default/Files/microsoft/" --recursive=true

# phi3_transformer = (
#     HuggingFaceCausalLM()
#     .setCachePath("/lakehouse/default/Files/microsoft/Phi-3-mini-4k-instruct")
#     .setInputCol("content")
#     .setOutputCol("result")
#     .setModelParam(max_new_tokens=1000)
# )

# result_df = phi3_transformer.transform(chat_df).collect()

# display(result_df)
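Before choosing between setCachePath and setModelName, it can help to confirm that the copied model folder is actually present. The following is a minimal sketch, not a SynapseML feature: `resolve_model_source` is a hypothetical helper, and treating a folder as usable when it contains `config.json` is a heuristic assumed for this example. The demo runs against a temporary folder that stands in for the Lakehouse path.

```python
import os
import tempfile


def resolve_model_source(cache_path, model_name):
    """Return ("cache", path) if the folder looks like a saved model, else ("hub", name).

    Assumption for this sketch: a usable folder exists and contains config.json.
    """
    config_file = os.path.join(cache_path, "config.json")
    if os.path.isdir(cache_path) and os.path.isfile(config_file):
        return ("cache", cache_path)
    return ("hub", model_name)


MODEL_NAME = "microsoft/Phi-3-mini-4k-instruct"

# A temporary folder stands in for /lakehouse/default/Files/microsoft/...
with tempfile.TemporaryDirectory() as tmp:
    # Folder that does not exist: fall back to the hub model name.
    missing = resolve_model_source(os.path.join(tmp, "absent"), MODEL_NAME)
    # Folder that contains config.json: use the cached copy.
    open(os.path.join(tmp, "config.json"), "w").close()
    present = resolve_model_source(tmp, MODEL_NAME)

print(missing)
print(present[0])
```

Depending on the first element of the result, the transformer would then be configured with `.setCachePath(path)` or `.setModelName(name)`.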

Utilize GPU

To use a GPU, pass device_map="cuda" and torch_dtype="auto" to setModelConfig.

phi3_transformer = (
    HuggingFaceCausalLM()
    .setModelName("microsoft/Phi-3-mini-4k-instruct")
    .setInputCol("content")
    .setOutputCol("result")
    .setModelParam(max_new_tokens=100)
    .setModelConfig(
        device_map="cuda",
        torch_dtype="auto",
    )
)

result_df = phi3_transformer.transform(chat_df).collect()

display(result_df)
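When the same notebook may run on clusters with or without GPUs, the model configuration can be chosen at run time instead of being hard-coded. The following is a minimal sketch under stated assumptions: `build_model_config` and `detect_cuda` are hypothetical helpers, the CPU fallback is a choice made for this example, and the check runs on the driver, so it assumes the executors have the same hardware.

```python
def build_model_config(cuda_available):
    """Return keyword arguments for setModelConfig based on GPU availability."""
    if cuda_available:
        return {"device_map": "cuda", "torch_dtype": "auto"}
    # Assumption: place the model on the CPU when no GPU is visible.
    return {"device_map": "cpu", "torch_dtype": "auto"}


def detect_cuda():
    """Report whether PyTorch sees a CUDA device; False if torch is not installed."""
    try:
        import torch
    except ImportError:
        return False
    return bool(torch.cuda.is_available())


model_config = build_model_config(detect_cuda())
print(model_config)
```

The resulting dictionary can be unpacked into the transformer with `.setModelConfig(**model_config)`, which matches the keyword-argument form used in this section.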

Phi 4

To try the newer Phi-4 model, set the model name to microsoft/Phi-4-mini-instruct.

phi4_transformer = (
    HuggingFaceCausalLM()
    .setModelName("microsoft/Phi-4-mini-instruct")
    .setInputCol("content")
    .setOutputCol("result")
    .setModelParam(max_new_tokens=100)
    .setModelConfig(
        device_map="auto",
        torch_dtype="auto",
        local_files_only=False,
        trust_remote_code=True,
    )
)

result_df = phi4_transformer.transform(chat_df).collect()

display(result_df)